Federated Learning with Flower: How to Train Your Model without Stealing Data

Join the Federated Forces and Learn How to Train Your Model in the Wild, Wild West of Decentralized Data!

Table of contents

Introduction

Hey there, fellow techies! Are you ready to embark on a journey into the exciting world of federated learning? Don't worry, I promise to make it as fun and easy to understand as possible. And to make things even more exciting, we'll be using the Flower framework for our examples!

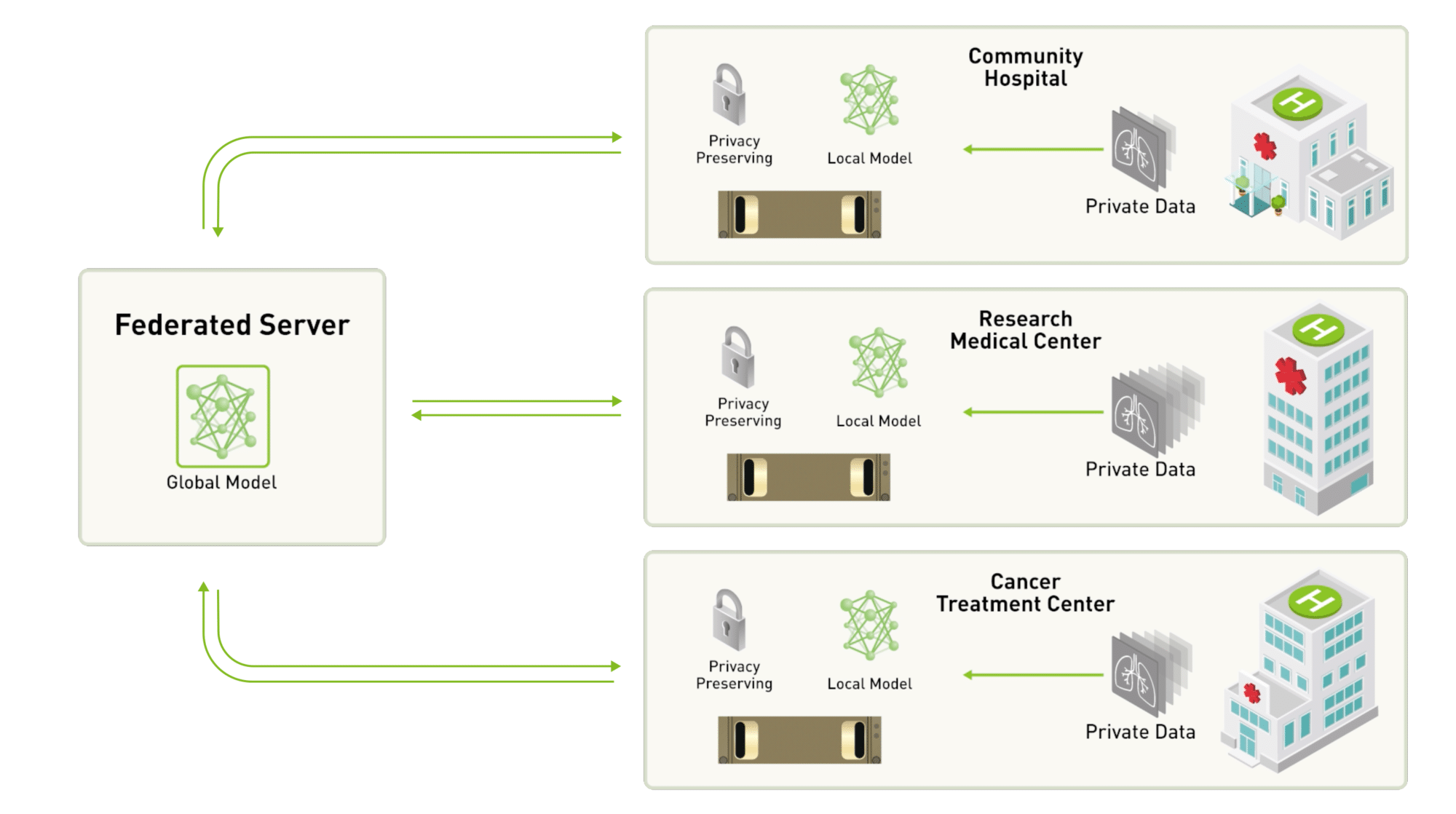

But first, let's quickly go over what federated learning is. In a nutshell, federated learning is a way of training machine learning models on decentralized data. Instead of centralizing all the data in one place, you keep the data on individual devices or servers and train the model in a distributed way.

Now, let's dive into Flower! Flower is an open-source Python framework for building federated learning systems. It provides an easy-to-use interface for implementing federated learning algorithms and protocols, as well as tools for tracking experiments and visualizing results. To get started with Flower, you'll need to install it first. You can do this by running the following command in your terminal:

pip install flower

Example

Now that you have Flower installed, let's write some code! For this example, we'll be training a simple model on a set of MNIST images using federated learning.

First, let's define our model:

import torch.nn as nn

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.fc1 = nn.Linear(784, 128)

self.fc2 = nn.Linear(128, 10)

def forward(self, x):

x = x.view(-1, 784)

x = nn.functional.relu(self.fc1(x))

x = self.fc2(x)

return nn.functional.log_softmax(x, dim=1)

This is a simple two-layer neural network that takes in a 28x28 pixel image (flattened into a 784-dimensional vector) and outputs a probability distribution over the 10 possible digits.

Next, let's define our federated learning setup using Flower:

import flower as fl

class MnistClient(fl.client.NumPyClient):

def __init__(self, cid, train_data, train_labels):

self.cid = cid

self.train_data = train_data

self.train_labels = train_labels

def get_parameters(self):

return model.state_dict()

def set_parameters(self, parameters):

model.load_state_dict(parameters)

def fit(self, parameters, config):

model.load_state_dict(parameters)

optimizer = torch.optim.SGD(model.parameters(), lr=config["lr"])

data, targets = torch.from_numpy(self.train_data).float(), torch.from_numpy(self.train_labels).long()

optimizer.zero_grad()

output = model(data)

loss = nn.functional.nll_loss(output, targets)

loss.backward()

optimizer.step()

return model.state_dict(), len(data), {}

def evaluate(self, parameters, config):

model.load_state_dict(parameters)

data, targets = torch.from_numpy(self.train_data).float(), torch.from_numpy(self.train_labels).long()

output = model(data)

loss = nn.functional.nll_loss(output, targets)

pred = output.argmax(dim=1, keepdim=True)

accuracy = pred.eq(targets.view_as(pred)).sum().item() / len(data)

return len(data), {"loss": loss.item(), "accuracy": accuracy}

server = fl.server.Server(b"http://localhost:8080")

data_loaders = []

for i in range(10):

data = torchvision.datasets.MNIST("data", train=True, download=True, transform=torchvision.transforms.Compose([torchvision.transforms.ToTensor()]))

indices = data.targets == i

data.targets = data.targets[indices]

Conclusion

In conclusion, federated learning is an exciting and rapidly growing field in machine learning. With the help of Flower, it's becoming easier than ever to build federated learning systems and experiment with different algorithms and protocols. I hope this brief introduction to federated learning and Flower has inspired you to dive deeper into this fascinating field and start building your federated learning systems!