Deploy Models with TensorFlow and Docker in Production

A walkthrough of deploying ML models with TensorFlow Serving and Docker

Problem Faced

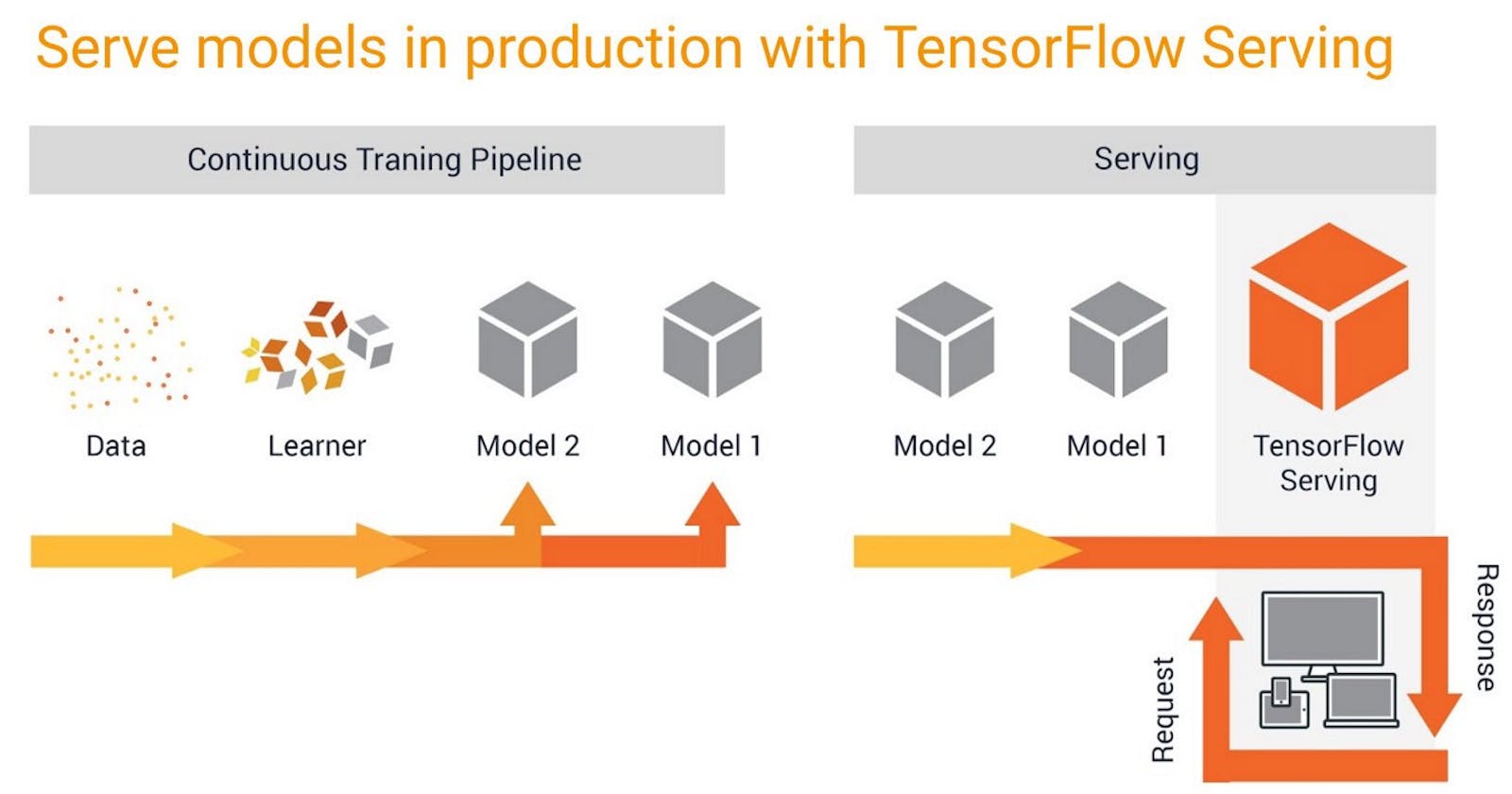

After models are trained and ready to deploy in a production environment, a lack of consistency with model deployment and serving workflows can present challenges in scaling your model deployments to meet the increasing number of ML use cases across your business.

Introduction to TensorFlow Serving

TensorFlow Serving is a flexible, high-performance serving system for machine learning models, designed for production environments. It lets you deploy new algorithms and experiments while keeping the same server architecture and APIs. TensorFlow Serving integrates with TensorFlow models out of the box, and it can be extended to serve other types of models and data.

Prerequisites

Install Docker

General installation instructions are on the Docker site, but we give some quick links here:

- Docker for macOS

- Docker for Windows for Windows 10 Pro or later

Save Model

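import tensorflow as tf

# Load VGG16 with its ImageNet weights and the top classification layer
model = tf.keras.applications.vgg16.VGG16(weights="imagenet", include_top=True)

# Freezing the weights (the model is only used for inference)
for layer in model.layers:
    layer.trainable = False

# Saving model using Keras save function, in the versioned layout
# TensorFlow Serving expects: <model_name>/<version>/ (here vgg16/1/).
# On TF 2.x with Keras 2 this writes a SavedModel; on Keras 3 (TF 2.16+)
# use model.export("vgg16/1/") instead.
model.save("vgg16/1/")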

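Here, the base VGG16 model is used as an example for serving. The 1/ subdirectory is the model version. TensorFlow Serving expects each version of a model in its own numbered directory under the model's base path, and by default it serves the highest version it finds.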
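Because versions are plain numbered directories, exporting a retrained model usually means picking the next free number. The sketch below assumes a local layout like the vgg16/1/ directory created above. The helper name next_version_dir is hypothetical and is not part of TensorFlow Serving. It only looks at integer-named subdirectories and ignores anything else in the folder.

```python
from pathlib import Path


def next_version_dir(model_base):
    """Return model_base/<N+1>, where N is the highest existing numeric version.

    Hypothetical helper: only integer-named subdirectories (1/, 2/, ...)
    count as versions; any other entries are ignored.
    """
    base = Path(model_base)
    versions = []
    if base.is_dir():
        versions = [int(p.name) for p in base.iterdir() if p.is_dir() and p.name.isdigit()]
    return base / str(max(versions, default=0) + 1)


# Example: with vgg16/1/ already present, this returns vgg16/2
export_dir = next_version_dir("vgg16")
print(export_dir)
```

Pass the returned path to whichever save or export call you use. Since the server serves the highest version by default, a new directory such as vgg16/2 becomes the one that answers requests once it is loaded.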

Serving with Docker

Pulling a serving image

Once you have Docker installed, you can pull the latest TensorFlow Serving docker image by running:

docker pull tensorflow/serving

Running a serving image

When the serving image starts, it launches the ModelServer as follows:

tensorflow_model_server --port=8500 --rest_api_port=8501 \
  --model_name=${MODEL_NAME} --model_base_path=${MODEL_BASE_PATH}/${MODEL_NAME}

The serving images (both CPU and GPU) have the following properties:

- Port 8500 exposed for gRPC

- Port 8501 exposed for the REST API

- Optional environment variable MODEL_NAME (defaults to model)

- Optional environment variable MODEL_BASE_PATH (defaults to /models)
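To see how these defaults combine, the sketch below rebuilds the tensorflow_model_server arguments shown above from a dictionary of environment variables. It is an illustration of the documented defaults, not the image's actual entrypoint script. The function name model_server_command is hypothetical.

```python
import os
import shlex


def model_server_command(env=None):
    """Rebuild the ModelServer arguments from MODEL_NAME / MODEL_BASE_PATH.

    Illustration only: uses the documented defaults (model, /models);
    this is not the serving image's real entrypoint.
    """
    env = os.environ if env is None else env
    name = env.get("MODEL_NAME", "model")
    base = env.get("MODEL_BASE_PATH", "/models")
    return [
        "tensorflow_model_server",
        "--port=8500",
        "--rest_api_port=8501",
        f"--model_name={name}",
        f"--model_base_path={base}/{name}",
    ]


print(shlex.join(model_server_command({"MODEL_NAME": "vgg16"})))
# prints: tensorflow_model_server --port=8500 --rest_api_port=8501 --model_name=vgg16 --model_base_path=/models/vgg16
```

With only MODEL_NAME set, the server looks for the model under /models/<MODEL_NAME>. For that reason, the model directory has to be mounted at that path inside the container.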

Now run the following command to serve the VGG16 model in detached mode (-d). The command mounts the local vgg16 directory at /models/vgg16 inside the container:

sudo docker run -d -p 8501:8501 -p 8500:8500 \
  --mount type=bind,source=/home/Khushiyant/Desktop/Deployment/vgg16,target=/models/vgg16 \
  -e MODEL_NAME=vgg16 -t tensorflow/serving:latest

NOTE: Running the GPU image (tensorflow/serving:latest-gpu) on a machine without a GPU, or without a working GPU build of the TensorFlow Model Server, will result in an error.
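In detached mode, docker run returns immediately, and the model may still be loading. TensorFlow Serving's REST API exposes a model status endpoint, GET /v1/models/<name>, which reports a state such as AVAILABLE for each version. The sketch below polls that endpoint using only the standard library. It assumes the default REST port 8501 and the model name vgg16. The function names and the 60-second timeout are illustrative choices.

```python
import json
import time
import urllib.request


def is_model_available(status):
    """True if any version in a GET /v1/models/<name> response is AVAILABLE."""
    return any(
        v.get("state") == "AVAILABLE" for v in status.get("model_version_status", [])
    )


def wait_until_ready(name="vgg16", host="http://localhost:8501", timeout=60.0):
    """Poll the model status endpoint until a version is AVAILABLE.

    Returns False if the timeout expires first. Connection errors and
    HTTP error responses are treated as "not ready yet".
    """
    url = f"{host}/v1/models/{name}"
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                if is_model_available(json.load(resp)):
                    return True
        except OSError:  # URLError, HTTPError and socket timeouts all subclass OSError
            pass
        time.sleep(1.0)
    return False
```

For example, calling wait_until_ready() before the first prediction request avoids failures that only happen because the model has not finished loading.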

Testing the REST API

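import json
import time

import cv2
import numpy as np
import requests
import tensorflow as tf

# Load the test image; OpenCV reads channels in BGR order, so convert to RGB
image = cv2.imread("image.jpg")
image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
# VGG16 expects 224x224 inputs
image = cv2.resize(image, (224, 224))
# Apply the same preprocessing VGG16 was trained with (expects RGB input)
image = tf.keras.applications.vgg16.preprocess_input(image.astype("float32"))
# Add a batch dimension: (224, 224, 3) -> (1, 224, 224, 3)
image = np.expand_dims(image, axis=0)

# create inference request
start_time = time.time()
url = "http://localhost:8501/v1/models/vgg16:predict"
data = json.dumps({"signature_name": "serving_default", "instances": image.tolist()})
headers = {"content-type": "application/json"}
response = requests.post(url, data=data, headers=headers)
response.raise_for_status()
prediction = json.loads(response.text)["predictions"]
print(f"Request took {time.time() - start_time:.3f} s")

# Map the 1,000 raw class scores to the top-5 ImageNet labels
print(tf.keras.applications.vgg16.decode_predictions(np.array(prediction), top=5))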
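You may not want to import TensorFlow on the client. In that case, plain Python can build the request body and rank the returned scores. This sketch assumes that the response's predictions field holds one list of 1,000 class scores per instance, which is the single softmax output of VGG16. make_predict_body and top_k are hypothetical helpers. Turning indices into readable labels would still require the ImageNet class index.

```python
import heapq
import json


def make_predict_body(instances, signature_name="serving_default"):
    """Build a row-format ("instances") JSON body for the :predict endpoint."""
    return json.dumps({"signature_name": signature_name, "instances": instances})


def top_k(scores, k=5):
    """Return (class_index, score) pairs for the k highest scores, best first."""
    return heapq.nlargest(k, enumerate(scores), key=lambda pair: pair[1])


# With the response from the client above, top_k(prediction[0]) would rank
# the first image's 1,000 class scores.
print(top_k([0.1, 0.7, 0.05, 0.15], k=2))  # prints [(1, 0.7), (3, 0.15)]
```

heapq.nlargest avoids sorting all 1,000 scores when only a handful are needed. For a single image, a full sort would work just as well.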

This should print the top five predicted ImageNet classes (WordNet ID, label, score), for example:

[[('n02100735', 'English_setter', 0.945853472),
  ('n02101556', 'clumber', 0.018291885),
  ('n02090622', 'borzoi', 0.00679293927),
  ('n02088094', 'Afghan_hound', 0.005323024),
  ('n02006656', 'spoonbill', 0.00221997637)]]
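The client above records a start time around a single request. One measurement is noisy, and the first requests to a freshly loaded model can be slower. The sketch below times several calls with time.perf_counter and reports simple statistics. The function name time_calls and the default counts are illustrative assumptions.

```python
import statistics
import time


def time_calls(fn, n=20, warmup=2):
    """Call fn() warmup + n times and return latency stats (ms) for the last n calls."""
    for _ in range(warmup):
        fn()  # discarded: early requests can include one-off setup cost
    samples = []
    for _ in range(n):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "median_ms": statistics.median(samples),
        "mean_ms": statistics.mean(samples),
        "max_ms": max(samples),
    }


# Usage with the request from the client above (url, data, headers):
# print(time_calls(lambda: requests.post(url, data=data, headers=headers)))
```

The median is usually a steadier summary than the mean here, because an occasional slow request can pull the mean up noticeably.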