Use of Transformer Networks in Neural Imaging

Exploring the Power of Transformer Networks in Neural Imaging: How BERT is Revolutionizing the Analysis of Medical Images and Brain Function

Introduction

Neural imaging, more commonly called neuroimaging, is a rapidly growing field that uses advanced imaging techniques to study the structure and function of the brain. These techniques include magnetic resonance imaging (MRI), positron emission tomography (PET), and functional MRI (fMRI). They allow researchers to study how the brain works and how it is affected by factors such as disease, injury, or ageing.

Transformer Networks

One area of research in neural imaging that has gained significant attention in recent years is the use of transformer networks. Transformer networks are a type of artificial neural network that is particularly well suited for processing sequential data, such as natural language or time series data. They have been successful in a wide range of applications, including language translation, language modelling, and computer vision.

One of the key advantages of transformer networks is their ability to process long sequences of data without recurrent connections. A recurrent network must work through a sequence one step at a time, which is slow and hard to parallelize, whereas a transformer relates all positions in the sequence to one another in parallel. This makes transformers attractive for neural imaging, where large volumes of data must be analyzed, sometimes in real time.
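The mechanism that lets a transformer do without recurrence is self-attention: every position in the sequence is compared with every other position, and each output is a weighted average of the whole sequence. The sketch below is a minimal pure-Python illustration, assuming identity query, key, and value projections (a real model learns these). The names `softmax`, `self_attention`, and `toy_sequence` are illustrative and not part of any library.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(sequence):
    """Scaled dot-product self-attention with identity projections.

    `sequence` is a list of equal-length vectors. A real transformer would
    first map each vector to learned query, key, and value vectors; that
    step is omitted here to keep the sketch short.
    """
    dim = len(sequence[0])
    outputs = []
    for query in sequence:
        # Compare this position with every position directly (no recurrence)
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in sequence]
        weights = softmax(scores)
        # The output is a weighted average of all vectors in the sequence
        mixed = [sum(w * vec[i] for w, vec in zip(weights, sequence))
                 for i in range(dim)]
        outputs.append(mixed)
    return outputs


# Toy sequence of three 2-dimensional "tokens" (values are made up)
toy_sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(toy_sequence)
```

Each row of `attended` depends on the whole input, and no row needs the result of a previous row, which is why the computation can run in parallel on real hardware.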

Bidirectional Encoder Representations from Transformers

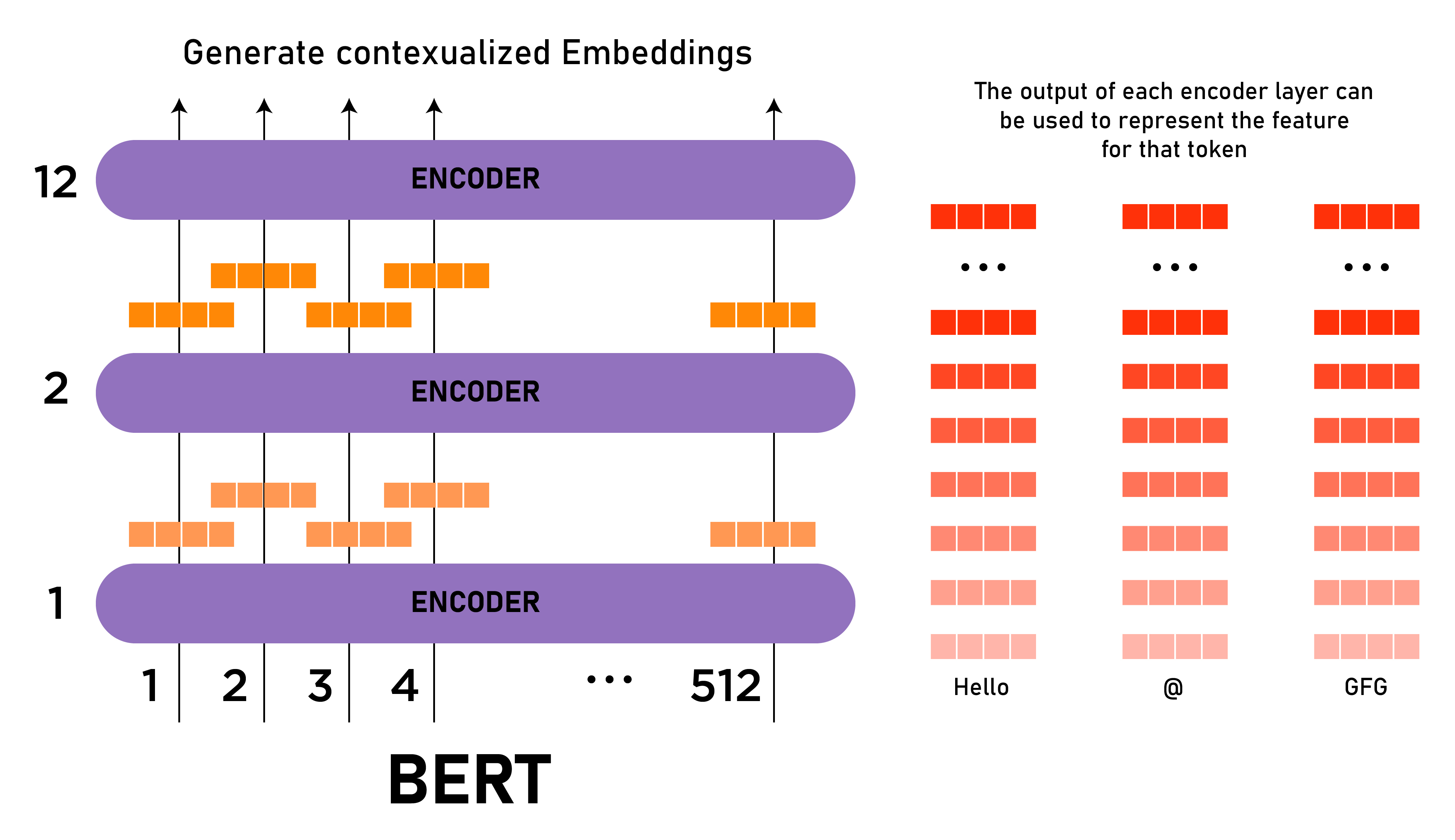

BERT, or Bidirectional Encoder Representations from Transformers, is a transformer-based model that has been widely used for natural language processing tasks such as text classification, question answering, and named entity recognition. BERT is pre-trained on a large corpus of text with a masked language modelling objective, and it learns to encode the contextual relationships between words in a sentence, so that the representation of each word reflects the words around it.
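BERT's pre-training relies on masked language modelling: some input tokens are hidden, and the model learns to predict them from the words on both sides. Below is a minimal sketch of the masking step only, assuming a simplified rule in which every selected token becomes `[MASK]` (the original recipe also sometimes substitutes a random token or leaves the token unchanged). The function `mask_tokens` and the example sentence are hypothetical.

```python
import random


def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Return (masked_tokens, labels) for a simplified masked-LM example."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_rate:
            masked.append("[MASK]")
            labels.append(token)   # the model must recover this token
        else:
            masked.append(token)
            labels.append(None)    # this position is not scored
    return masked, labels


sentence = "the scan shows no sign of injury".split()
masked, labels = mask_tokens(sentence, mask_rate=0.3)
```

During training, the loss would be computed only at positions where `labels` is not `None`.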

Example

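import torch

from transformers import BertModel, BertTokenizer

# Load the pre-trained BERT model and its tokenizer

model = BertModel.from_pretrained('bert-base-uncased')

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

# BertTokenizer accepts text only, so this placeholder sentence (an assumption for illustration) stands in for text that accompanies a scan

report_text = 'No acute abnormality is seen on the chest x-ray.'

# Encode the text as a sequence of token IDs

tokens = tokenizer.encode(report_text)

# Convert the token IDs to a tensor with a batch dimension

tokens_tensor = torch.tensor([tokens])

# Pass the tokens through BERT; the first output holds one hidden vector per token

with torch.no_grad():

    encoded_text = model(tokens_tensor)[0]

This code loads the pre-trained 'bert-base-uncased' model and tokenizer, encodes a sentence as a sequence of token IDs, converts the IDs to a tensor, and passes the tensor through BERT to get one contextual vector per token. Note that the BERT tokenizer operates on text, not on pixel data. To apply a transformer to an image, the image must first be converted into a sequence, for example by splitting it into patches as Vision Transformer style models do.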

One area where BERT-style models have been applied in neural image processing is the analysis of medical images, for example identifying abnormalities in x-ray images or detecting signs of disease in CT scans. Because BERT itself operates on text tokens, such approaches generally reuse its encoder architecture and training ideas on a sequence derived from the image rather than feeding pixels to the text model directly. A model of this kind can be trained on a large dataset of annotated medical images and then used to classify new images based on the features it has learned.
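Since BERT's tokenizer expects text, an image has to be turned into a sequence before a transformer can consume it. One common approach, used by Vision Transformer style models, is to cut the image into fixed-size patches and flatten each patch into a vector. Below is a minimal sketch, assuming a grayscale image stored as a list of rows. The function `image_to_patches` and the 4x4 toy image are hypothetical.

```python
def image_to_patches(image, patch_size):
    """Split a 2D grayscale image into flattened, non-overlapping patches.

    Patches are returned in row-major order, so the result is a sequence a
    transformer could embed and process like a sentence of tokens.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[row][col]
                     for row in range(top, top + patch_size)
                     for col in range(left, left + patch_size)]
            patches.append(patch)
    return patches


# Toy 4x4 "scan" with pixel values 0..15
toy_image = [[r * 4 + c for c in range(4)] for r in range(4)]
patch_sequence = image_to_patches(toy_image, patch_size=2)
```

Here `patch_sequence` holds four vectors of four pixel values each. In a real model, each vector would be projected to an embedding and combined with a position encoding before entering the encoder.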

Another example is the analysis of structural MRI data. Structural MRI is a brain imaging technique that produces detailed 3D images of the brain's anatomy. Transformer-based models in the style of BERT have been used on structural MRI data to identify brain abnormalities and predict the presence of certain brain disorders.

Overall, BERT-style models have shown great potential in the field of neural image processing, allowing researchers to analyze and classify large amounts of data. The same mechanism that lets BERT model the context between words and phrases can model the context between image regions once the image is represented as a sequence, which is what makes the approach a good fit for medical images and structural MRI data.

fMRI data

One example of the use of transformer networks in neural imaging is the analysis of fMRI data. fMRI allows researchers to measure brain activity indirectly by tracking changes in blood flow and oxygenation in different regions of the brain. Because an fMRI recording is a time series, it fits the sequential input that transformers expect. Transformer networks can be used to analyze fMRI data, potentially in real time, to study how different brain regions interact and how they are affected by various factors.
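To hand fMRI data to a transformer, the recording has to be arranged as a sequence. One simple option, sketched here under stated assumptions, treats each time point as a token whose features are the signal values of the brain regions at that moment, and cuts the recording into fixed-length windows. The function `sliding_windows`, the window settings, and the toy values are all hypothetical.

```python
def sliding_windows(time_series, window, stride):
    """Cut a [time][region] recording into overlapping windows of time points."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    return [time_series[start:start + window]
            for start in range(0, len(time_series) - window + 1, stride)]


# Toy recording: 6 time points, 3 regions (values are made up)
recording = [[0.1, 0.2, 0.3],
             [0.2, 0.1, 0.4],
             [0.3, 0.3, 0.2],
             [0.4, 0.2, 0.1],
             [0.5, 0.4, 0.3],
             [0.6, 0.5, 0.2]]
windows = sliding_windows(recording, window=4, stride=2)
```

Each element of `windows` is a short sequence of time points that could be fed to a transformer encoder as one training example.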

PET data

Another example of the use of transformer networks in neural imaging is the analysis of PET data. PET is an imaging technique that uses radioactive tracers to measure metabolic activity and other processes in the brain. As with fMRI, transformer networks can be used to analyze PET data, potentially in real time, to study how different brain regions interact and how they are affected by various factors.

Conclusion

Overall, the use of transformer networks in neural imaging is a promising area of research that has the potential to revolutionize our understanding of the brain. By allowing researchers to analyze large amounts of data, in some cases in real time, transformer networks are helping to shed light on the complex mechanisms that underlie brain function and to pave the way for new treatments and therapies for a wide range of brain disorders.